Make Your Chat Context Feel Infinite: Rolling Compression for GPT & Gemini

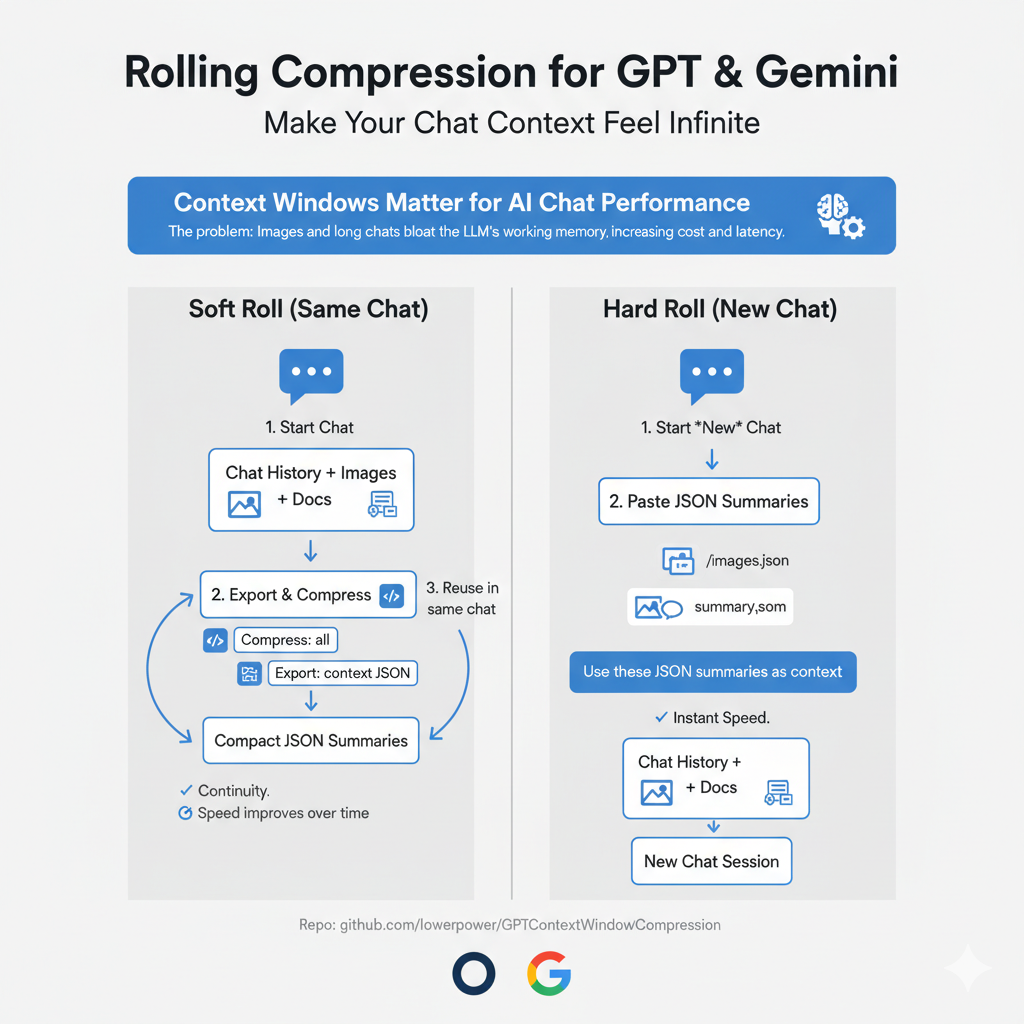

Make chats feel infinite without bigger windows: convert images/long history to lightweight JSON, then hard roll for instant speed or soft roll for continuity.

Tinkering with Time, Tech, and Culture #14

TL;DR — Images and long chats bloat context windows. GPTContextWindowCompression replaces heavy bits with tiny JSON summaries so you can roll your project forward indefinitely: stay in the same chat (soft roll) or start a fresh one (hard roll).

Repo: https://github.com/lowerpower/GPTContextWindowCompression

Why Context Windows Matter for AI Chat Performance

In conversational AI, a context window is the model’s working memory—the amount of text (in tokens) it can consider at once. Bigger windows preserve more history and coherence, but they’re also costlier and can diffuse attention (middle content gets fuzzy). The trick isn’t only “more”—it’s managing what stays.

Rolling compression turns heavy parts of a chat (images, long histories, messy drafts) into compact, structured summaries you can carry forward. You don’t literally enlarge the window—you export, compress, and reload the essentials so the conversation feels infinite while staying fast.

Why this exists

Transformers attend to everything still inside the window. A single image can “weigh” thousands of tokens; a long back-and-forth drags latency and cost. You can’t enlarge the built-in window in the Web UI, but you can manage what you carry.

Idea: Convert heavy content into compact, structured text and keep working with that.

What you get (v1.2)

- Pasteable commands (

Compress:/Export:) for OpenAI GPT (ChatGPT) and Gemini (Gemini method will likely work on Claude.ai and perplexity.ai). - “Lite” image representation: caption + labels + tiny scene graph + micro Q/A + OCR — all text.

- One-click export: snapshot a conversation before trimming.

- Soft vs. Hard roll workflows so you choose continuity or instant speed.

This is a workflow, not a literal window increase. You export + reload summaries to keep moving fast.

Quick Start (OpenAI GPT)

- (Optional) Install spec for this chat

Paste the contents ofcompression-menu.json, then send:Store this in memory and activate these commands. - (Optional) Set light defaults

Remember: Recognize 'Compress:' commands with defaults (images = Compact representation=lite; conversation = 400; notes = JSON; batch all images; show savings). - (Optional) Export a snapshot

Export: context JSON→ save tosnapshots/YYYY-MM-DD/context_snapshot.json - Compress everything

Compress: all

You’ll get:image_card_liteblocks (per image)- a

conversation_summary(~400 tokens) - a token-savings estimate (approximate in Web UI)

- Save

- Combine image cards →

snapshots/YYYY-MM-DD/images.json - Save summary →

snapshots/YYYY-MM-DD/summary.json

- Combine image cards →

- Reuse in a new chat

Paste both JSON files and say:Use these JSON summaries as context (they replace the original images and long history).

Quick Start (Gemini)

After pasting the spec (compression-menu.json) into a chat, send:

As my AI assistant, you are now a context compression tool. Store this JSON and use it to execute my commands. All commands start with "Compress:" or "Export:".Then repeat steps 3–6 from the OpenAI Quick Start.

Gemini note: instructions are per-chat; paste the short defaults each session.

Soft vs. Hard roll (which to use when)

- Soft roll (same chat): organize now; speed improves later as old tokens scroll out.

- Hard roll (new chat): paste only

images.json+summary.json(and optional snapshot) → instant snappiness.

Rule of thumb: if latency is already noticeable or you attached multiple images/docs, do a hard roll.

Token savings (rule-of-thumb)

| Item | Before (tokens) | After (tokens) | % Saved |

|---|---|---|---|

| Image (raw encoding) | ~3,000 | Ultra ~40 | ~99% |

| Compact ~400 | ~85–90% | ||

| Compact+Lite ~500 | ~82–85% | ||

| Conversation → brief | 6–10k → 400 | 400 | ~90% |

| Notes → JSON | variable | ~½–¼ size | ~50–75% |

Web UI shows estimates. Exact counts come from API usage metrics.

The “Lite” image card (tiny but searchable)

{

"type": "image_card_lite",

"version": "1.0",

"id": "example-1",

"alt": "Two people under a wooden frame in a desert with mountains behind",

"captions": {

"ultra": "Two people at a desert event under a wooden frame.",

"compact": "Two people stand beneath a simple wooden frame in a bright desert setting. One wears a wide-brim hat; mountains in the far distance. Friendly, casual mood."

},

"labels": ["person","wide-brim hat","wooden frame","desert","mountains"],

"scene_graph": [

["person-left","wears","wide-brim hat"],

["structure","stands-in","desert"]

],

"qa": [

{"q":"How many people?","a":"2"},

{"q":"Environment?","a":"Desert with a simple frame and distant mountains"}

],

"ocr": {"text": ""}

}

Because it’s all text, it’s cheap to carry and easy to query later (“show images with ‘wooden frame’”).

Commands you’ll actually use

- Daily images (best default):

Compress: images Compact representation=lite - Super-lean many photos:

Compress: images Ultra representation=lite max_labels=5 max_triples=5 max_qa=3 - Conversation brief:

Compress: conversation 400 - Notes to structure:

Compress: notes JSON - Everything at once:

Export: context JSON→Compress: all

Privacy notes

- Don’t add identities to image outputs unless you explicitly provide them.

- Strip GPS/EXIF before committing artifacts.

- Redact sensitive content in summaries.

Limitations

- This doesn’t increase the model’s window; it’s a workflow that keeps only what matters.

- Exact token accounting isn’t exposed in the Web UI; use the API for precise usage.

- Offline extras (embeddings/pHash/ANN) are out of scope for the Web UI but great for pipelines.

Try it now

- Export (optional): Export: context JSON

- Compress: Compress: all

- Reuse (new chat): Paste images.json + summary.json, then:

"Use these JSON summaries as context (they replace the original images and long history)."

What’s next: image_card_medium (teaser)

We’re exploring a ~300-token “medium” card with reliability signals (must_preserve, uncertainties, confidence) and a minimal chart schema (chart_min). Ideal for charts, dashboards, and UI mockups when you need a bit more structure—without carrying the image.

If that sounds useful, watch the repo and subscribe—follow-up post soon.

Get the code

- Repo: https://github.com/lowerpower/GPTContextWindowCompression

- Version: 1.2 (Lite image cards, Export, improved defaults)

- If you use it, post a before/after token chart or a screenshot of your

images.json—I’d love to feature it.