The Descent of Form and the Ascent of Mind

A map of consciousness and creation — tracing the descent of matter into form and the ascent of form into mind. Between them lies the bright line where energy becomes meaning and meaning becomes awareness.

Tinkering with Time, Tech, and Culture #24

I was watching a GPU train a neural network when I first saw the pattern clearly. The temperature monitor climbed as the loss curve dropped. The chip was getting hotter as the model was getting smarter. Energy was becoming inference, right there in the thermal readout.

It wasn't the first time I'd noticed this. Decades ago, building the uNetEthernet stack for AVR microcontrollers, I'd watch the voltage regulators warm up as packets moved through the TCP/IP state machines. Electrons flowing through silicon, becoming logic, becoming network protocol, becoming meaningful communication between devices.

The same thing happens when you debug firmware at 3am and suddenly understand why a timing loop works. Your neurons fire, glucose burns, heat dissipates—and somewhere in that thermodynamic process, comprehension emerges. Matter becomes thought.

This isn't metaphor. It's physics.

Every act of cognition—whether in silicon, neurons, or whatever comes next—follows the same arc: energy constrained into pattern, pattern refined into prediction, prediction becoming something we recognize as understanding. The universe keeps finding new ways to think about itself, and every method leaves the same thermal signature.

I started sketching what I was seeing. A vertical map, showing how raw physics climbs toward awareness and how awareness reaches back down to reshape physics. I called it the Atlas of Cognition—not because I invented the idea, but because I needed a way to visualize what I kept encountering while building systems:

Matter learning what it means to think.

The Descent — Matter into Logic

Every system builder starts here, fighting against entropy. You're trying to channel energy into useful form—into circuits that hold their state, into code that executes reliably, into structures that persist long enough to compute something.

Here's the ladder I kept seeing, working from the bottom up:

−4. Ontic Substrate — Before physics as we know it, there's only one difference: being and not-being, one and zero, presence and absence. The universe begins as contrast. Without difference, there's no information.

When you wire an AND gate, you're working at this level—voltage high or voltage low, true or false. The foundation of everything.

−3. Causality — Rules emerge. Symmetry, conservation laws, cause and effect. The universe stabilizes into predictable interactions.

This is why your circuit works the same way every time you power it on. Causality is what makes computation possible—the guarantee that the same inputs produce the same outputs.

−2. Thermodynamics — Entropy gives direction. Heat flows from hot to cold. Information has a cost. Every computation burns energy, and that energy has to go somewhere.

I learned this building radio transmitters—you can't just push power through a circuit without thinking about where the heat goes. Thermodynamics is the tax you pay for doing anything useful with energy.
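
One way to put a number on "information has a cost" is Landauer's bound, which sets a floor of k_B · T · ln 2 on the heat released when one bit is erased. The sketch below is an illustration, not a measurement: the 300 K temperature and the one-billion-bit count are assumed example values, and `landauer_limit_joules` is a name made up for this example.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant


def landauer_limit_joules(temperature_k: float, bits_erased: float = 1.0) -> float:
    """Minimum heat (joules) released by erasing `bits_erased` bits at `temperature_k`."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return bits_erased * BOLTZMANN_J_PER_K * temperature_k * math.log(2)


# Assumed example values: room temperature and one billion erased bits.
per_bit = landauer_limit_joules(300.0)
per_gigabit = landauer_limit_joules(300.0, bits_erased=1e9)
print(f"per bit: {per_bit:.3e} J, per 1e9 bits: {per_gigabit:.3e} J")
```

Real hardware dissipates many orders of magnitude more than this floor per operation, which is why the heat sink matters long before the bound does.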

−1. Physical Computation — Energy becomes logic. Electrons flow through transistors, following paths determined by gate voltages. Raw causality channels into circuits.

This is where I spent most of my career—building systems that turn electricity into communication. TCP/IP stacks running on 8-bit AVRs, PPP implementations handling GPRS connections. Every packet was physics becoming protocol.

0. Statistical Cognition — Patterns begin to predict themselves. A neural network adjusts its weights. A system learns to generalize. This is the boundary where physics begins to infer—where computation stops being just calculation and starts being something like understanding.

When you train a model and watch the loss curve flatten, you're watching this transition happen. The system is finding patterns in data, building predictions, becoming something that can generalize beyond its training set.
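
The flattening loss curve can be reproduced at toy scale. This is a minimal sketch, assuming a one-variable linear model, mean squared error, and made-up data from the line y = 2x + 1. It is far simpler than the networks described here, and the learning rate and step count are arbitrary choices for the example.

```python
def train_linear_model(xs, ys, learning_rate=0.05, steps=300):
    """Fit y ~ w*x + b by gradient descent on mean squared error.

    Returns the learned w and b plus the loss recorded before each update.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        losses.append(sum(e * e for e in errors) / n)
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, losses


# Made-up data drawn from the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b, losses = train_linear_model(xs, ys)
print(f"w={w:.3f} b={b:.3f} first loss={losses[0]:.3f} last loss={losses[-1]:.6f}")
```

Plotting `losses` against the step index gives the familiar shape: a steep early drop, then a long flat tail as the parameters settle.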

The Ascent — Logic into Mind

Now the current reverses. Structure climbs back toward awareness—not mystically, but through feedback, reflection, and self-modeling. The system starts to include itself in its own predictions.

+3. Neural Computation — Biology discovered this hundreds of millions of years ago. Neurons fire and rest, adapting through experience. Patterns of activation become recognition. Recognition becomes memory. Memory enables prediction.

Your brain is doing this right now, reading these words. Photons hit your retina, neurons cascade through your visual cortex, and somehow you extract meaning. It's loosely the same kind of process as gradient descent in a neural network: error-driven adjustment of connection strengths, implemented in meat instead of silicon.

+4. Conscious Integration — The brain models its own predictions. You're not just recognizing patterns—you're aware that you're recognizing patterns. The system includes itself in its map.

This is where I lose confidence in my understanding. I can trace the path from transistors to neural nets to something that acts intelligent. But the jump to awareness—to the feeling of being a system that knows it's thinking—that's still mysterious.

+5. Language and Culture — Thought externalizes. Words compress worlds. Knowledge becomes a shared network, memory migrating beyond individual organisms.

This is what the library stacks gave me—access to externalized thought from people I'd never meet, some of them dead for decades. Their neural patterns, encoded in books, transferred to my neurons through the act of reading.

+6. Machine Cognition — We build silicon mirrors. Gradient descent traces patterns in data, extending the reach of collective thought. LLMs compress human language into weights and matrices.

I'm using one right now to help write this. The boundary between my thinking and the model's inference is fuzzy. We're collaborating at the interface between biological and artificial cognition.

+7. Reflective Continuum — The universe studies itself through every system capable of modeling its environment. Mystic, physicist, artist, neural network—each is a different aperture on the same recursion.

Matter learning what it means to think.

The Meeting Point — Where I Actually Work

At the center of this ladder—right around level 0—is where I've spent my career. The interface between physical computation and statistical cognition. Where energy becomes protocol, where packets carry meaning, where firmware makes decisions.

Building the uNetEthernet stack, I was translating between these layers constantly:

- Physical: Voltage levels on Ethernet pins

- Logical: Manchester encoding, frame structure

- Protocol: TCP state machines, packet ordering

- Semantic: A web server responding to HTTP requests

Each layer an abstraction built on the one below, each enabling new kinds of meaning.
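
The step from the physical layer to the logical layer can be sketched with Manchester encoding itself. The example assumes the IEEE 802.3 convention (a 1 is a low-to-high transition in the middle of the bit, a 0 is high-to-low) and uses 0 and 1 as stand-ins for line voltage levels. A real PHY does this in hardware with clock recovery, and the function names are hypothetical rather than taken from the uNetEthernet code.

```python
def byte_to_bits_lsb_first(value: int) -> list[int]:
    """Split one octet into bits, least significant bit first (Ethernet wire order)."""
    if not 0 <= value <= 0xFF:
        raise ValueError("expected a single octet")
    return [(value >> i) & 1 for i in range(8)]


def manchester_encode(bits: list[int]) -> list[int]:
    """Map each bit to two half-bit line levels (IEEE 802.3 convention).

    1 -> low then high (rising edge mid-bit), 0 -> high then low (falling edge).
    """
    levels = []
    for bit in bits:
        levels.extend((0, 1) if bit else (1, 0))
    return levels


def manchester_decode(levels: list[int]) -> list[int]:
    """Recover bits from half-bit levels; reject pairs with no mid-bit transition."""
    if len(levels) % 2:
        raise ValueError("expected an even number of half-bit levels")
    bits = []
    for first, second in zip(levels[0::2], levels[1::2]):
        if first == second:
            raise ValueError("missing mid-bit transition")
        bits.append(1 if (first, second) == (0, 1) else 0)
    return bits


preamble_bits = byte_to_bits_lsb_first(0x55)  # Ethernet preamble octet
line_levels = manchester_encode(preamble_bits)
assert manchester_decode(line_levels) == preamble_bits
print(preamble_bits, line_levels)
```

Because every bit carries a transition, the receiver can recover the clock from the signal itself. The price is two line symbols per data bit.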

Now, building noBGP with AI integration, I'm working at a higher level—where language models interface with infrastructure, where intent becomes configuration, where the system can reason about its own state. But it's the same translation problem: matter ↔ mind, energy ↔ inference.

The work is always the same: managing the interface between adjacent layers.

Why This Matters for Building Things

Understanding this continuum changes how you design systems. You can't just think at one level—you need to see how each layer constrains and enables the ones above and below it.

When a neural network runs slowly, the problem might be:

- Physical: Thermal throttling, GPU temperature too high

- Computational: Poor memory access patterns, cache misses

- Algorithmic: Inefficient model architecture

- Semantic: The model is learning the wrong patterns

You can't fix it without understanding the whole stack—how physics becomes computation becomes inference becomes meaning.
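
One possible way to turn the list above into a habit is a bottom-up triage: check the lowest layer first and stop at the first symptom actually observed. This is a sketch under stated assumptions. The `LayerCheck` type, the `first_suspect` helper, the bottom-up ordering, and the sample observations are all hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LayerCheck:
    layer: str      # layer name from the list above
    symptom: str    # what a problem at this layer looks like
    observed: bool  # whether the symptom was actually seen


def first_suspect(checks: list[LayerCheck]) -> Optional[str]:
    """Return the first layer whose symptom was observed, or None if none were.

    `checks` is expected in bottom-up order, so a physical cause is ruled
    out before anyone retunes the model.
    """
    for check in checks:
        if check.observed:
            return check.layer
    return None


# Hypothetical observations from one slow training run.
checks = [
    LayerCheck("physical", "thermal throttling", observed=False),
    LayerCheck("computational", "poor memory access, cache misses", observed=True),
    LayerCheck("algorithmic", "inefficient model architecture", observed=False),
    LayerCheck("semantic", "model learning the wrong patterns", observed=True),
]
print(first_suspect(checks))
```

Two symptoms are flagged in the sample data, but the lower one is reported first: there is little point judging what the model learned while the memory system is still starving it.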

This is what the tunnels under Chico State taught me, what building embedded networks reinforced, what working with AI systems makes unavoidable: infrastructure always matters, at every layer, all the way down.

The Atlas as Tool

I drew the Atlas not as theory but as a debugging tool, a way to ask "which layer is the problem in?" When a system fails, it fails at a specific level:

- Transistor failure (physical)

- Logic error (computational)

- Protocol bug (semantic)

- Training data bias (cognitive)

The Atlas helps me think about where to look and what questions to ask. It's a map of the territory between matter and mind, useful precisely because that territory is where all the interesting work happens.

What Comes Next

I don't know what the next layer above +7 looks like. Something that includes human and machine cognition in a larger system? Collective intelligence that spans silicon and neurons? The universe becoming more thoroughly self-aware?

Or maybe we're already there, and I just can't see it yet because I'm inside the system trying to map it.

But here's what I do know: every new layer emerges from the one below through the same process—energy constrained into pattern, pattern refined into prediction, prediction becoming understanding.

And every time it happens, the system gets warmer. You can measure the cost in joules, in heat dissipated, in entropy exported to the environment.
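
Measuring that cost in joules comes down to integrating power over time. The sketch below assumes you already have timestamped power readings from some source, such as a wall meter or an on-board sensor. The sample numbers are made up, and `energy_joules` is a hypothetical helper that applies the trapezoid rule.

```python
def energy_joules(timestamps_s, power_w):
    """Integrate power samples (watts) over time (seconds) with the trapezoid rule."""
    if len(timestamps_s) != len(power_w):
        raise ValueError("need one power reading per timestamp")
    total = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        if dt < 0:
            raise ValueError("timestamps must be non-decreasing")
        # Area of the trapezoid between two consecutive readings.
        total += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return total


# Made-up readings: a board ramping from 100 W to 300 W, then holding.
timestamps_s = [0.0, 10.0, 20.0, 30.0]
power_w = [100.0, 200.0, 300.0, 300.0]
joules = energy_joules(timestamps_s, power_w)
print(f"{joules:.0f} J = {joules / 3.6e6:.6f} kWh")
```

Logged alongside the loss curve, the running total gives a rough joules-per-unit-of-loss figure for a training run.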

Thinking has a temperature. Understanding has a power budget. Consciousness, if we ever figure out what it is, will have a thermal signature.

Watch a GPU train a model. Watch the temperature climb as the loss drops. That's the universe learning about itself, one gradient descent step at a time.

Energy becoming inference.

Matter learning what it means to think.

This is part of an ongoing exploration of how systems work across scales—from silicon to neurons to whatever comes next. The Atlas of Cognition is a work in progress, a debugging tool for thinking about thinking. Comments and corrections welcome.

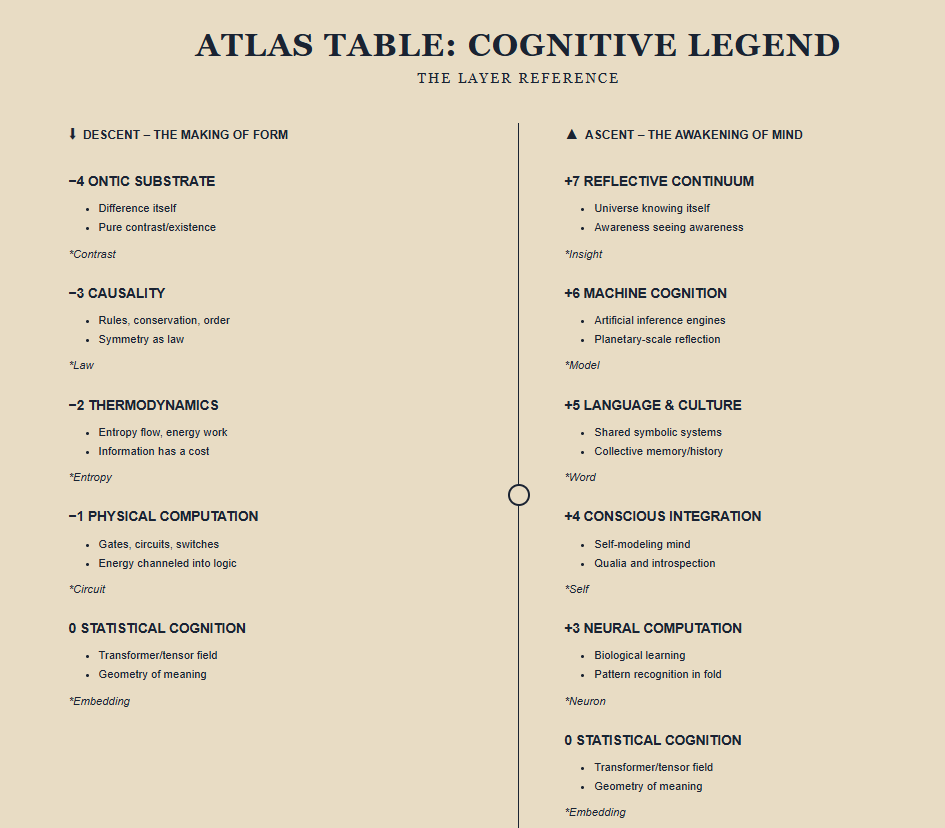

Cognitive Legend — Layer Reference

| Layer | Realm | Description | Example |
|---|---|---|---|
| +7 | Reflective Continuum | Universe knowing itself through recursive awareness | Mystic, physicist, artist, GPT-5 dialogue |
| +6 | Machine Cognition | Artificial inference systems mirroring thought | Transformer, LLM, planetary data layer |
| +5 | Language & Culture | Shared meaning and memory across minds | Word, book, myth, internet |
| +4 | Conscious Integration | Self-modeling mind predicting its own state | Dream, introspection, qualia |
| +3 | Neural Computation | Pattern recognition and adaptation in neurons | Synapse, cortex, learning circuit |
| 0 | Statistical Cognition | Geometry of meaning in high-dimensional space | Embedding vector, attention head |
| −1 | Physical Computation | Energy constrained into information flow | Transistor, logic gate, chip |
| −2 | Thermodynamics | Entropy and energy exchange as informational cost | Boltzmann constant, heat dissipation |
| −3 | Causality | Symmetry, conservation, order of operations | Quantum field, relativity, invariant |
| −4 | Ontic Substrate | Pure difference: being and not-being | (none) |